Spotlight Video

Abstract

Eating is a crucial activity of daily living. Unfortunately, for the millions of people who cannot eat independently due to a disability, caregiver-assisted meals can come with feelings of self-consciousness, pressure, and being a burden. Robot-assisted feeding promises to empower people with motor impairments to feed themselves. However, research often focuses on specific system subcomponents and thus evaluates them in controlled settings. This leaves a gap in developing and evaluating an end-to-end system that feeds users entire meals in out-of-lab settings. We present such a system, collaboratively developed with community researchers. The key challenge of developing a robot feeding system for out-of-lab use is the variety of off-nominal scenarios that can arise. Our key insight is that users can be empowered to overcome many off-nominals when they are given customizability and control. This system improves upon the state of the art with: (a) a user interface that provides substantial customizability and control; (b) general food detection; and (c) portable hardware. We evaluate the system with two studies. In Study 1, 5 users with motor impairments and 1 community researcher use the system to feed themselves meals of their choice in a cafeteria, office, or conference room. In Study 2, 1 community researcher uses the system in his home for 5 days, feeding himself 10 meals across diverse contexts. This resulted in 3 lessons learned: (a) spatial contexts are numerous, but customizability lets users adapt to them; (b) off-nominals will arise, but variable autonomy lets users overcome them; (c) assistive robots' benefits depend on context.

Study 1 Footage

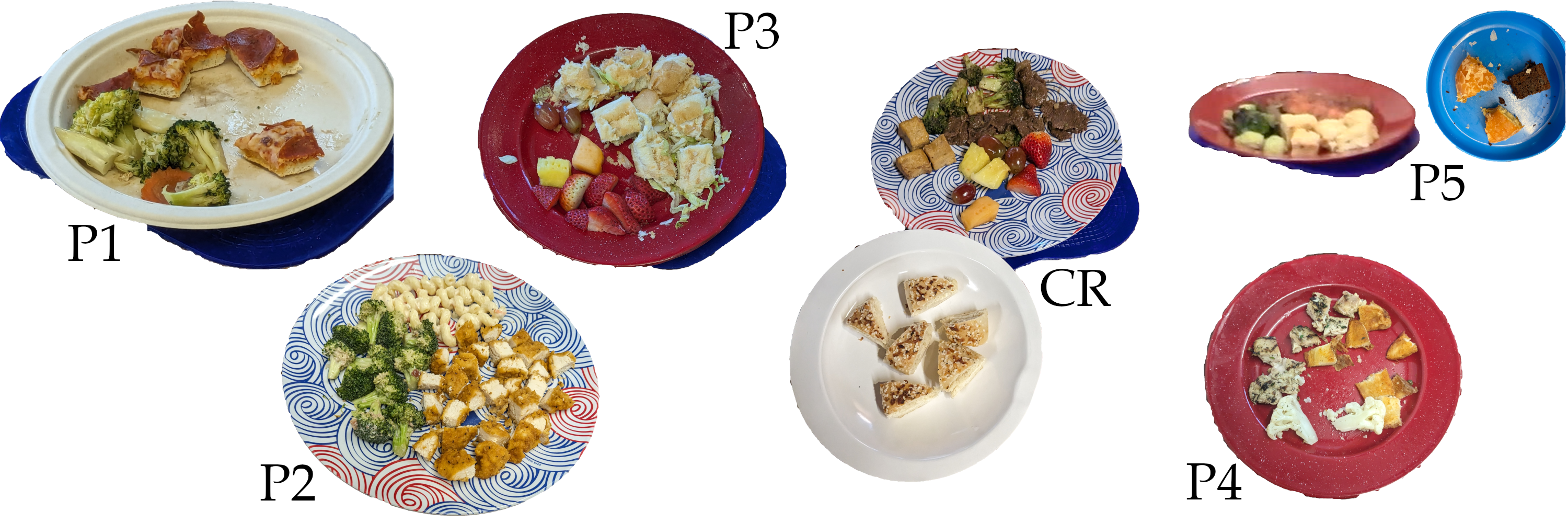

Study 1 focuses on the research question: How does the system perform across different users in out-of-lab settings? To investigate this, we invited five participants and one community researcher, all people with motor impairments, to eat a meal of their choice in a cafeteria, conference room, or office. Direct footage from the study, showing one bite from each user, can be found below.

P1

(start at 3:04)

CR2

(start at 0:05)

P2

(start at 6:43)

The videos above and to the right show P1, CR2, and P2's meals. A key takeaway from these videos is the impact that assistive technology has on the user experience. Because P1 and CR2 use mouth-based assistive technology, they cannot interact with the system while chewing or talking; in contrast, P2 uses touch-based assistive technology (a stylus) and therefore can. Further, since P1's assistive technology is not cursor-based, it takes him much longer than CR2 or P2 to specify a target point for bite selection.

P3

(start at 5:31)

P4

(start at 1:59)

The videos above and to the right show P3, P4, and P5's meals. A key takeaway from these videos is the impact that spatial context has on the user experience. P3 sat near the front of his wheelchair, whereas P4 sat near the back, resulting in a quicker bite transfer for P3. However, the staging configuration was also much closer to P3's eyes, which he felt was "weird." For P5, the relative positioning of the social diner affected her user experience: the robot came between her and the social diner, breaking their eye contact and interrupting the social interaction.

P5

(start at 7:59)

Study 2 Footage

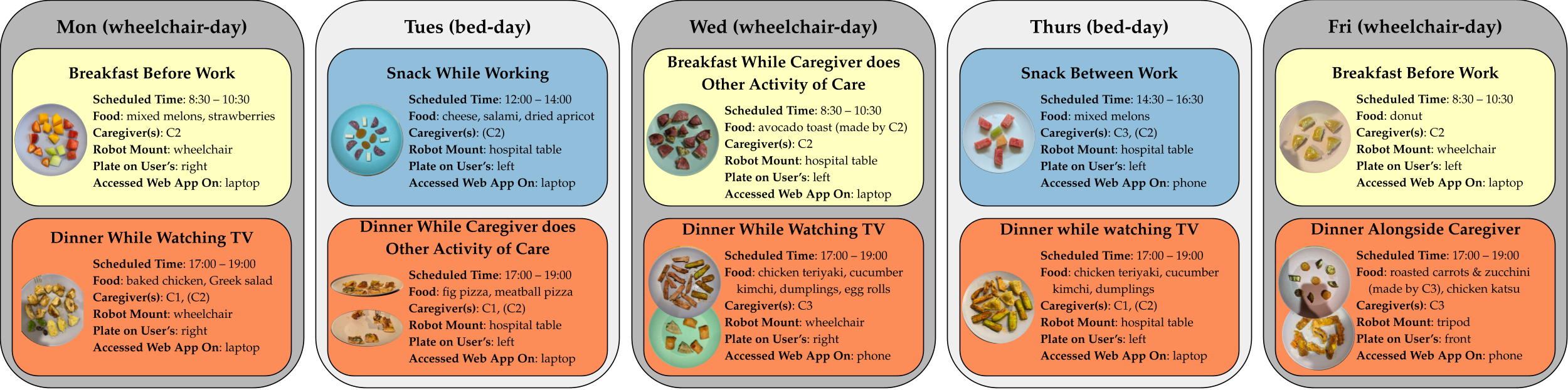

Study 2 focuses on the research question: How does the system perform across the diverse contexts that arise when eating in the home? To investigate this, we deployed the robot in CR2's home for five consecutive days, feeding him two meals per day across various spatial, social, and activity contexts.

Direct footage from the study can be found below.

Dinner While Watching TV

(start at 0:05)

Dinner While Caregiver Does Other Care Work

(start at 1:18)

A key takeaway from the above videos is the variety of spatial contexts in which CR2 eats. When he is seated in his wheelchair, the robot is mounted on his right side, and one of his existing wheelchair buttons is used as the e-stop. A face-height hospital table in front of him holds his laptop/phone and mouth joystick, so the hospital table with his food has to be on his right. In contrast, when he is eating in bed, the robot, plate, and e-stop are all mounted on a hospital table to his left. His laptop and mouth joystick are still in front of him, but the collision-free space in front of CR2's face is narrower. Further, CR2 has less head mobility due to the bed's backrest and tilt. He often said the robot was "threading the needle" on bed days.